Introduction and Objectives

This Proxmox VE–based, multi-node testbed supports research and prototyping across Cellular Networking, network measurement, Software-Defined Networking (SDN), and Network Function Virtualization (NFV). It offers realistic north–south connectivity via dual WWAN gateways, flexible east–west segmentation using EVPN overlays, internal DNS, and a Kubernetes/OKD substrate for deploying Aether 5G components. The environment emphasizes repeatability, observability, and safe remote management.

Objectives

- Enable reproducible, isolated experiments for Cellular Networking, SDN, and NFV research.

- Support precise timing via PTP.

- Provide resilient egress using dual VyOS gateways with VRRP.

- Offer flexible L2 overlays with Proxmox SDN + EVPN.

- Host Aether 5G workloads on a Kubernetes/OKD cluster.

- Centralize service discovery with BIND 9 DNS.

- Ensure safe access via a dedicated management network using iDRAC and MikroTik gear.

Access platform and VMs

Platform

- Platform: All servers run Proxmox VE (Debian-based).

- PVE web UI, reachable from the management gateway: https://pve.yanboyang.com:8006/

Proxmox LXC (recommended)

Open Container Initiative (OCI) containers, such as Docker, are also supported.

Virtual Machine (VM)

- Access VMs from the Proxmox VE (PVE) web UI. For CLI-only workloads, Proxmox Linux Containers (LXC) are recommended.

- Use SPICE (Simple Protocol for Independent Computing Environments) for the graphical interface.

Physical Topology and Components

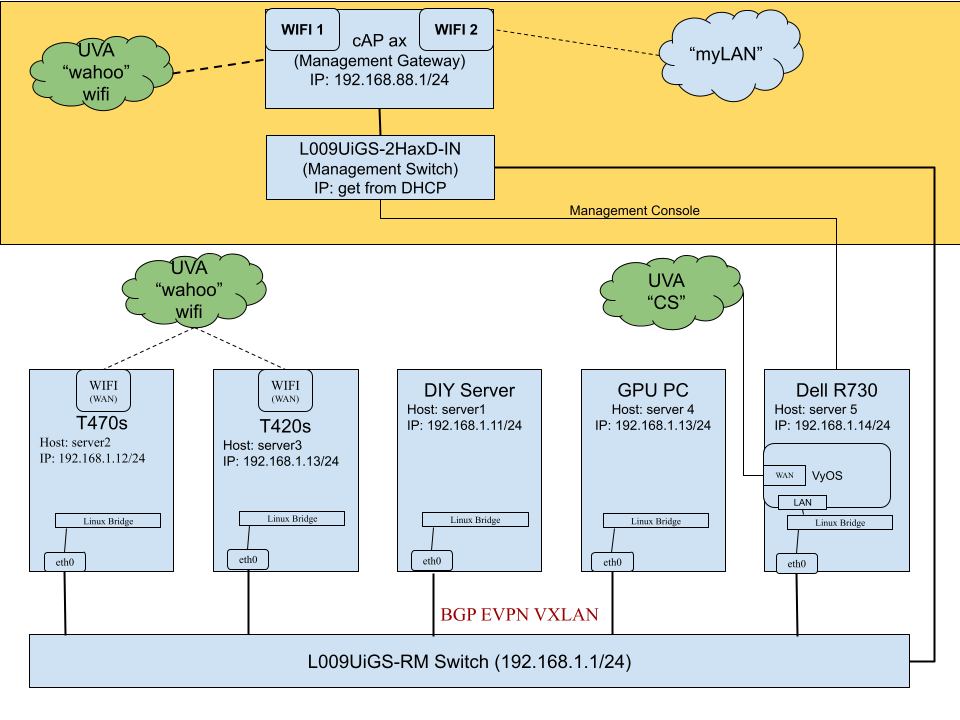

Topology overview

The testbed consists of a dedicated management network for iDRAC and PVE web UI access; a Proxmox VE LAN with dual VyOS WWAN gateways and one wired VyOS gateway to the CS network; EVPN overlays for tenant/lab networks; and a Kubernetes/OKD cluster for Aether 5G.

Management Network

The MikroTik cAP ax is the only device that performs Internet NAT. The Proxmox VE (PVE) main switch, a MikroTik L009UiGS-RM, does not NAT between the two private LANs (192.168.1.x and 192.168.88.x).

MikroTik cAP ax (Gateway between Management Network and “wahoo”)

- Model: cAPGi-5HaxD2HaxD-US

- FCC ID: TV7CPG52X; IC: 7442A-CAPAX

- Ethernet MAC: 78:9A:18:59:78:80

- Wi‑Fi1 (5.8 GHz) MAC: 78:9A:18:59:78:83

- Wi‑Fi2 (2.4 GHz) MAC: 78:9A:18:59:78:82

- Serial: HF2098EMRR7/343/US

- Wi‑Fi1 joins hidden SSID “wahoo” as a station and obtains an IP from the UVA Wi‑Fi DHCP (current: 172.27.135.44).

- Bridge groups ether1, ether2, and Wi‑Fi2 as LAN; bridge IP: 192.168.88.1/24; NAT to Wi‑Fi1.

- Port‑forward TCP 8006 from WAN (Wi‑Fi1) to 192.168.1.11

- Wi‑Fi2 provides LAN SSID LAN-WiFi.

- Details: cAP ax setup details (step by step)
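Because traffic between the two private LANs is routed rather than NATed, the address ranges must not overlap, and the port-forward target has to be reachable by plain routing. The following is a minimal sketch of that sanity check using only addresses stated in this document; the helper name `lan_of` is illustrative, and the actual routing configuration on the cAP ax is not shown here.

```python
import ipaddress

# Values taken from this document.
MGMT_LAN = ipaddress.ip_network("192.168.88.0/24")   # cAP ax bridge LAN
PVE_LAN = ipaddress.ip_network("192.168.1.0/24")     # PVE main switch LAN
PORT_FORWARD_TARGET = ipaddress.ip_address("192.168.1.11")  # TCP 8006 target


def lan_of(addr):
    """Return the name of the private LAN that contains addr, or None."""
    for name, net in (("management", MGMT_LAN), ("pve", PVE_LAN)):
        if addr in net:
            return name
    return None


overlap = MGMT_LAN.overlaps(PVE_LAN)
target_lan = lan_of(PORT_FORWARD_TARGET)
print("LANs overlap:", overlap)
print("port-forward target is on:", target_lan)
```

The target sits on the PVE LAN rather than on the cAP ax bridge LAN, which suggests the cAP ax needs a route toward 192.168.1.0/24 for the port forward to work.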

MikroTik L009UiGS-2HaxD-IN

- Role: Management Ethernet LAN switch for a dedicated management network.

- Connects iDRAC ports of all servers for remote power/reset and monitoring.

- FCC ID: TV7L0092AXIN; IC: 7442A-L0092AXIN

- Serial: HFC092SVAWD/345

- Built-in Wi‑Fi MAC: 78:9A:18:B6:B0:B5

- Obtains its IP from the cAP ax (DHCP)

Proxmox VE Cluster Network

Proxmox VE (PVE) main switch MikroTik L009UiGS-RM

- Serial: HFE097YP05K/346

- LAN

- Gateway/LAN IP: 192.168.1.1/24

- Netmask: 255.255.255.0

- No WAN

- No NAT of any kind (including masquerade).

- Details: main switch details

DIY server

- Hostname: server1.testbed.com

- IP: 192.168.1.11/24

- Gateway: 192.168.1.4

- DNS: 192.168.1.1

- Hard Disks:

- SanDisk (PVE: local and local-lvm): SIZE - 238.5G; MODEL - CWDISK 256G; SERIAL - J150404B07502

- Western Digital (not in use): SIZE - 1.8T; MODEL - WDC WD20EFAX-68FB5N0; SERIAL - WD-WX91A19E6J3N

T470s

- Hostname: server2.testbed.com

- IP: 192.168.1.12/24

- Gateway: 192.168.1.4

- DNS: 192.168.1.1

- Hard Disks:

- SAMSUNG (PVE: local and local-lvm): SIZE - 238.5G; MODEL - MZVLW256HEHP-000L7; SERIAL - S35ENA1K581888

Wi‑Fi card (Proxmox PCI passthrough for VyOS Gateway):

- Description: Wireless interface

- Product: Intel Wireless 8260

- Vendor: Intel Corporation

- Bus info: pci@0000:3a:00.0

- Logical name: wlp58s0

- MAC: 14:ab:c5:8f:ab:f6

T420s

- Hostname: server3.testbed.com

- IP: 192.168.1.13/24

- Gateway: 192.168.1.4

- DNS: 192.168.1.1

- Hard Disks:

- INTEL (PVE: local and local-lvm): SIZE - 223.6G; MODEL - SSDSC2KW240H6; SERIAL - CVLT627101DQ240CGN

3G card:

- Logical name: wwp0s29u1u4

- MAC: ba:49:c2:37:84:ee

Wi‑Fi card (Proxmox PCI passthrough for VyOS Gateway):

- Model: Centrino Advanced‑N 6205 [Taylor Peak]

- Vendor: Intel Corporation

- Logical name: wlp3s0

- MAC: a0:88:b4:75:2a:50

GPU PC

- Hostname: server4.testbed.com

- IP: 192.168.1.14/24

- Gateway: 192.168.1.4

- DNS: 192.168.1.1

- GPU: Nvidia GeForce RTX 2070

- Hard Disks:

- HGST Ultrastar (PVE: local and local-lvm): SIZE - 465.8G; MODEL - HUSMR1650ASS201; SERIAL - 0QY10YMA

Dell R730 Server

- Hostname: server5.testbed.com

- IP: 192.168.1.15/24

- Gateway: 192.168.1.4

- DNS: 192.168.1.1

- GPU: NVIDIA Corporation GK104GL [GRID K2]

- Hard Disks:

- Crucial (PVE: local and local-lvm): SIZE - 465.8G; MODEL - CT500P1SSD8; SERIAL - 1942E223FD79

- ZFS pool:

- sdb 1.1T X425_STBTE1T2A10 Z4005ZAH sas

- sdc 1.1T X425_STBTE1T2A10 S400N4TC sas

- sdd 1.1T X425_STBTE1T2A10 Z40053QL sas

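The per-host addressing above follows one pattern: every node sits in 192.168.1.0/24 and points at 192.168.1.4 as its gateway. A small consistency check along these lines can catch copy-paste errors when the inventory changes. This is a sketch only; the dictionary layout and the `validate` helper are illustrative, while the addresses come from the host entries above.

```python
import ipaddress

PVE_LAN = ipaddress.ip_network("192.168.1.0/24")
VRRP_GATEWAY = ipaddress.ip_address("192.168.1.4")

# Hostnames, IPs, and gateways as listed in this document.
HOSTS = {
    "server1.testbed.com": {"ip": "192.168.1.11/24", "gateway": "192.168.1.4"},
    "server2.testbed.com": {"ip": "192.168.1.12/24", "gateway": "192.168.1.4"},
    "server3.testbed.com": {"ip": "192.168.1.13/24", "gateway": "192.168.1.4"},
    "server4.testbed.com": {"ip": "192.168.1.14/24", "gateway": "192.168.1.4"},
    "server5.testbed.com": {"ip": "192.168.1.15/24", "gateway": "192.168.1.4"},
}


def validate(hosts):
    """Return a list of human-readable problems; empty means consistent."""
    problems = []
    seen = {}
    for name, cfg in hosts.items():
        iface = ipaddress.ip_interface(cfg["ip"])
        if iface.network != PVE_LAN:
            problems.append(f"{name}: {iface} is not in {PVE_LAN}")
        if ipaddress.ip_address(cfg["gateway"]) != VRRP_GATEWAY:
            problems.append(f"{name}: gateway {cfg['gateway']} is not {VRRP_GATEWAY}")
        if iface.ip in seen:
            problems.append(f"{name}: {iface.ip} already used by {seen[iface.ip]}")
        seen[iface.ip] = name
    return problems


print(validate(HOSTS) or "inventory is consistent")
```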

Services

Domain Name Service (DNS)

DNS is based on BIND 9.

- Primary: 192.168.1.21/24

- Secondary: 192.168.1.22/24

- Scope: DNS for the Proxmox VE cluster only. The dedicated management network has its own DNS. The Kubernetes/OKD cluster uses internal DNS.
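As an illustration of what the BIND 9 zone for the cluster might contain, the sketch below renders A records for the five PVE hosts. The zone name `testbed.com` is inferred from the hostnames in this document; the `a_records` helper is hypothetical, and the real zone files (SOA, NS, TTLs) are not shown here.

```python
# Hostnames and addresses as listed in this document.
HOSTS = {
    "server1.testbed.com": "192.168.1.11",
    "server2.testbed.com": "192.168.1.12",
    "server3.testbed.com": "192.168.1.13",
    "server4.testbed.com": "192.168.1.14",
    "server5.testbed.com": "192.168.1.15",
}


def a_records(hosts, zone):
    """Render zone-relative A records for every host that belongs to zone."""
    suffix = "." + zone
    lines = []
    for fqdn, ip in sorted(hosts.items()):
        if not fqdn.endswith(suffix):
            continue  # skip names outside this zone
        label = fqdn[: -len(suffix)]
        lines.append(f"{label} IN A {ip}")
    return lines


records = a_records(HOSTS, "testbed.com")
print("\n".join(records))
```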

EVPN DNS (PowerDNS): 192.168.1.23/24

NetBox

- IP: 192.168.1.26/24

Proxmox NetBox Sync

- IP: 192.168.1.27/24

- Hostname: pve-netbox-sync

- Gateway: 192.168.1.4

DHCP

Kea DHCP IP: 192.168.1.25/24

VyOS WWAN - External Network Gateway (UVA’s wahoo)

- Primary: 192.168.1.5/24 (To CS Network)

- Backup: 192.168.1.2/24

- Backup: 192.168.1.3/24

- VRRP virtual/default gateway (LAN): 192.168.1.4/24

The T470s and T420s laptops host VyOS VMs with Wi‑Fi interfaces passed through. The T470s's VyOS VM serves as primary; the T420s's VyOS VM is secondary. Each VyOS VM has:

- One interface to the campus Wi‑Fi WAN (Internet via campus DHCP).

- One interface to the Proxmox OVS bridge (internal LAN).
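To make the failover behavior concrete, here is a simplified model of VRRP master election: among the routers that are alive, the one with the highest priority owns the virtual address, and ties go to the higher interface address. The router names and priority values are hypothetical (this document does not state them); only the three gateway addresses are taken from the list above. Advertisement timers and preemption settings are not modeled.

```python
from dataclasses import dataclass
import ipaddress


@dataclass
class Router:
    name: str          # hypothetical label
    address: str       # LAN address from this document
    priority: int      # hypothetical VRRP priority (1-254)
    alive: bool = True


def elect_master(routers):
    """Return the live router with the highest (priority, address), or None."""
    candidates = [r for r in routers if r.alive]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda r: (r.priority, int(ipaddress.ip_address(r.address))),
    )


routers = [
    Router("gw-primary", "192.168.1.5", priority=200),
    Router("gw-backup-1", "192.168.1.2", priority=150),
    Router("gw-backup-2", "192.168.1.3", priority=100),
]

print("master:", elect_master(routers).name)
routers[0].alive = False  # simulate loss of the primary gateway
print("master after failure:", elect_master(routers).name)
```

In this model, hosts that use 192.168.1.4 as their default gateway need no reconfiguration when the master changes, since the virtual address moves with the election result.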

VyOS CS network Gateway

LAN: 192.168.1.5/24

Setting Up EVPN on Proxmox SDN

EVPN provides L2 overlays across Proxmox nodes for tenant and lab networks while keeping the underlay simple. Proxmox SDN’s EVPN uses VXLAN for the data plane and BGP for the control plane.

- Underlay: simple IP reachability between nodes (no L2 stretch).

- Control plane: BGP EVPN peering between PVE nodes.

- Data plane: VXLAN VNIs mapped to VLAN-aware bridges for VMs/containers.

- Segmentation: per‑VNI L2 domains; import/export via route targets.

- Management: configure declaratively in Proxmox SDN; see detailed setup.
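Two details of the points above lend themselves to a quick calculation: VXLAN encapsulation adds 50 bytes over an IPv4 underlay, so guest interfaces on an overlay need a smaller MTU, and route targets are commonly written as `ASN:VNI`. The sketch below shows both. The ASN 65000 and VNI 10100 are placeholder values, not taken from this testbed, and the `ASN:VNI` form is a common convention rather than a statement of how this deployment is configured.

```python
# Per-packet overhead of VXLAN over an IPv4 underlay:
# outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet header (14).
VXLAN_OVERHEAD_IPV4 = 20 + 8 + 8 + 14  # 50 bytes

MAX_VNI = 2**24 - 1  # the VNI field is 24 bits wide


def overlay_mtu(underlay_mtu):
    """Largest guest MTU that fits in one underlay packet without fragmentation."""
    return underlay_mtu - VXLAN_OVERHEAD_IPV4


def route_target(asn, vni):
    """Format a route target in the common ASN:VNI form."""
    if not 1 <= vni <= MAX_VNI:
        raise ValueError(f"VNI {vni} is outside 1..{MAX_VNI}")
    return f"{asn}:{vni}"


print(overlay_mtu(1500))           # guest MTU for a standard 1500-byte underlay
print(route_target(65000, 10100))  # placeholder ASN and VNI
```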

Kubernetes/OpenShift (OKD) Cluster

The project deploys Aether 5G components on a Kubernetes/OKD cluster. Several VMs act as control-plane and worker nodes (e.g., 3 control-plane + 2 worker, adjusted as resources permit) distributed across the servers.

Notes:

- DNS: BIND 9 provides external DNS records for Kubernetes control-plane nodes. The cluster uses internal DNS (e.g., CoreDNS) for pods and services.

- Networking: CNI is Cilium (eBPF). The cluster network is separate from the physical topology; use BGP if advertising pod/service CIDRs to the underlay is required.

- Load Balancer: HAProxy fronts the Kubernetes API and balances across control-plane nodes.
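As a rough illustration of the load-balancer note, the following sketch renders a TCP-mode HAProxy frontend and backend for the Kubernetes API from a list of control-plane nodes. The node names and IP addresses are placeholders, since this document does not list them; port 6443 is the conventional Kubernetes API port and is assumed here. The real HAProxy configuration for this cluster may differ.

```python
def haproxy_api_config(nodes, port=6443):
    """Render a minimal TCP pass-through config for the Kubernetes API.

    nodes: iterable of (name, ip) pairs for the control-plane nodes.
    """
    lines = [
        "frontend k8s_api",
        f"    bind *:{port}",
        "    mode tcp",
        "    default_backend k8s_api_nodes",
        "",
        "backend k8s_api_nodes",
        "    mode tcp",
        "    balance roundrobin",
        "    option tcp-check",
    ]
    for name, ip in nodes:
        # "check" enables health checks so failed nodes leave the rotation.
        lines.append(f"    server {name} {ip}:{port} check")
    return "\n".join(lines)


# Placeholder control-plane nodes (hypothetical names and addresses).
CONTROL_PLANE = [
    ("cp1", "192.168.1.31"),
    ("cp2", "192.168.1.32"),
    ("cp3", "192.168.1.33"),
]

config = haproxy_api_config(CONTROL_PLANE)
print(config)
```

TCP mode is used in this sketch so that TLS terminates on the API servers themselves rather than on the load balancer.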

Precision Time Protocol (PTP) Synchronization

PTP ensures consistent, sub‑millisecond time synchronization across hosts to support accurate measurements and time‑sensitive 5G components.
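The core of PTP's delay request-response mechanism is a four-timestamp exchange: the master sends Sync at t1, the slave receives it at t2, the slave sends Delay_Req at t3, and the master receives it at t4. Assuming a symmetric path, the slave's clock offset and the one-way delay follow directly. The sketch below works through that arithmetic with made-up timestamps; it is not tied to any particular PTP implementation used in this testbed.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Compute (offset, delay) from one Sync / Delay_Req exchange.

    t1: master send time of Sync        (master clock)
    t2: slave receive time of Sync      (slave clock)
    t3: slave send time of Delay_Req    (slave clock)
    t4: master receive time of Delay_Req (master clock)
    Assumes the path delay is the same in both directions.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2   # slave clock minus master clock
    delay = ((t2 - t1) + (t4 - t3)) / 2    # one-way path delay
    return offset, delay


# Made-up example in nanoseconds: the slave runs 100 ns ahead of the
# master and the one-way delay is 50 ns.
offset, delay = ptp_offset_and_delay(t1=0, t2=150, t3=200, t4=150)
print(offset, delay)
```

Any asymmetry between the two directions shows up as an error in the computed offset, which is one reason hardware timestamping and a simple underlay help measurement accuracy.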