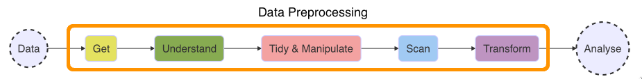

We define five major tasks in the data preprocessing framework: Get, Understand, Tidy & Manipulate, Scan, and Transform.

A typical data preprocessing process usually (but not necessarily) carries out these tasks in the order given below:

- Get: A data set can be stored on a computer or online, in a variety of file formats. We need to get the data set into R by importing it from other data sources (e.g., .txt, .xls, and .csv files, or databases) or by scraping it from the web. R provides many useful commands to import (and export) data sets in different file formats.

- Understand: We cannot perform any type of data preprocessing without understanding what we have in hand. In this step, we will check the data volume (i.e., the dimensions of the data) and structure, understand the variables/attributes in the data set, and understand the meaning of each level/value for the variables.

- Tidy & Manipulate: In this step, we will apply several important tasks to tidy up messy data sets. We will follow Hadley Wickham’s “Tidy Data” principles:

  - Each variable must have its own column.

  - Each observation must have its own row.

  - Each value must have its own cell.

  We may also need to manipulate the data, i.e., filter, arrange, select, subset/split it, or generate new variables from the data set.

- Scan: This step will include checking for plausibility of values, cleaning data for obvious errors, identifying and handling outliers, and dealing with missing values.

- Transform: Some statistical analysis methods are sensitive to the scale of the variables and it may be necessary to apply transformations before using them. In this step we will introduce well-known data transformations, data scaling, centering, standardising and normalising methods.
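The five tasks above can be sketched end to end on a small example. This is only a minimal illustration in base R, not the course's prescribed workflow: the data set, the column names, and the plausible height range of 50 to 250 cm are all made up for the example.

```r
# Hypothetical toy data: every name and value here is made up for illustration.
csv_text <- "id,height_2020,height_2021
1,150,152
2,160,NA
3,170,171
4,165,999"

# Get: import the data (read.csv() also accepts a file path or a URL).
raw <- read.csv(text = csv_text)

# Understand: check the dimensions, the structure, and a summary of each variable.
dim(raw)
str(raw)
summary(raw)

# Tidy: the two height columns hold one variable (height) spread over
# two years, so reshape to one row per id-year observation.
tidy <- reshape(
  raw,
  direction = "long",
  varying   = c("height_2020", "height_2021"),
  v.names   = "height",
  timevar   = "year",
  times     = c(2020, 2021),
  idvar     = "id"
)

# Manipulate: arrange by id and year, and keep the columns we need.
tidy <- tidy[order(tidy$id, tidy$year), c("id", "year", "height")]
rownames(tidy) <- NULL

# Scan: flag implausible values (assumed valid range: 50 to 250 cm),
# treat them as missing, then count the missing values.
implausible <- !is.na(tidy$height) & (tidy$height < 50 | tidy$height > 250)
tidy$height[implausible] <- NA
n_missing <- sum(is.na(tidy$height))

# Transform: centre and scale height to z-scores, ignoring missing values.
tidy$height_z <- (tidy$height - mean(tidy$height, na.rm = TRUE)) /
  sd(tidy$height, na.rm = TRUE)
```

Setting the implausible value to `NA` is just one possible way of handling it; whether to remove, replace, or keep such values depends on the data and the analysis.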

There are also other steps related to preprocessing special types of data, including dates, times, and characters/strings. The last module of this course will introduce the special operations used for date, time, and text preprocessing.
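As a small taste of what such operations look like, the sketch below uses base R only; the course module may well rely on dedicated packages instead, and the values shown are made up for illustration.

```r
# Hypothetical raw values, made up for illustration.
raw_dates <- c("2021-03-15", "2021-12-01")
raw_names <- c("  alice SMITH ", "Bob jones")

# Dates: convert character strings to Date objects so that
# date arithmetic works.
dates <- as.Date(raw_dates, format = "%Y-%m-%d")
days_between <- as.numeric(difftime(dates[2], dates[1], units = "days"))

# Strings: trim the surrounding whitespace and standardise the case.
clean_names <- toupper(trimws(raw_names))
```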