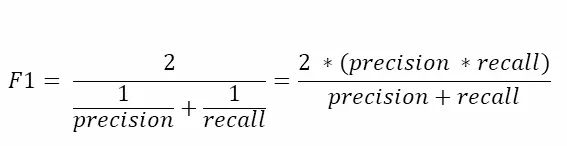

The F1 score is the harmonic mean of precision and recall, and a higher score indicates a better model. It is calculated using the following formula:

$$F_1 = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$
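Here is a minimal plain-Python sketch that makes the calculation concrete without scikit-learn. It counts true positives, false positives, and false negatives. It then combines precision and recall as their harmonic mean, 2 · precision · recall / (precision + recall). The sketch assumes binary labels coded as 0/1, with 1 as the positive class, which matches `f1_score`'s default `pos_label=1`. The helper name `f1_from_labels` is hypothetical. Returning 0.0 when there are no positive predictions, no actual positives, or precision and recall are both zero is a choice made for this sketch.

```python
def f1_from_labels(actual, predicted):
    """F1 for binary 0/1 labels, treating 1 as the positive class (hypothetical helper)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)  # correctly flagged positives
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)  # negatives flagged as positive
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)  # positives that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0  # assumption: define F1 as 0 when both are zero
    # Harmonic mean of precision and recall
    return 2 * precision * recall / (precision + recall)


actual = [1, 1, 1, 1, 0, 0, 0, 0]
predicted = [1, 1, 0, 0, 1, 0, 0, 0]
print(round(f1_from_labels(actual, predicted), 4))  # 0.5714
```

In this example, precision is 2/3 and recall is 1/2, so F1 = 4/7 ≈ 0.5714. That is below the arithmetic mean of about 0.583, because the harmonic mean is pulled toward the smaller of the two values.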

We can obtain the F1 score from scikit-learn's `f1_score` function, which takes the actual labels and the predicted labels as inputs:

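```python
from sklearn.metrics import f1_score

# df.actual_label holds the true labels; df.predicted_RF holds the model's predicted labels
f1_score(df.actual_label.values, df.predicted_RF.values)
```

How do we assess a model if we haven't picked a threshold? One very common method is to use the receiver operating characteristic (ROC) curve and the ROC AUC score (the area under the ROC curve).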
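scikit-learn provides `roc_curve` and `roc_auc_score` in `sklearn.metrics`. Both take the true labels and a continuous score, such as a predicted probability, rather than thresholded labels. The plain-Python sketch below shows what they compute. It sweeps the threshold over every distinct score and records the false positive rate and true positive rate at each step. It then integrates the resulting curve with the trapezoidal rule. The sketch relies on these assumptions: labels are 0/1 with 1 as the positive class, a sample counts as positive when its score is >= the threshold, and both classes appear at least once. `roc_points` and `roc_auc` are hypothetical helper names.

```python
def roc_points(actual, scores):
    """(FPR, TPR) pairs from sweeping the threshold over each distinct score (hypothetical helper).

    Assumes 0/1 labels with both classes present; score >= threshold means "predict positive".
    """
    pos = sum(1 for a in actual if a == 1)
    neg = len(actual) - pos
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for a, s in zip(actual, scores) if s >= t and a == 1)
        fp = sum(1 for a, s in zip(actual, scores) if s >= t and a == 0)
        points.append((fp / neg, tp / pos))  # (false positive rate, true positive rate)
    return points  # last point is (1.0, 1.0): everything predicted positive


def roc_auc(actual, scores):
    """Area under the ROC curve via the trapezoidal rule (hypothetical helper)."""
    pts = roc_points(actual, scores)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


actual = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_points(actual, scores))  # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(roc_auc(actual, scores))     # 0.75
```

An AUC of 1.0 means every positive is scored above every negative, while random scores give about 0.5 on average. Because the curve covers all thresholds, this summary doesn't depend on choosing one. In practice you would pass the model's predicted probabilities (for example, from a scikit-learn classifier's `predict_proba`) instead of its predicted labels.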